Power law

A power law is a special kind of mathematical relationship between two quantities. When the frequency of an event varies as a power of some attribute of that event (e.g. its size), the frequency is said to follow a power law. For instance, the number of cities having a certain population size is found to vary as a power of the size of the population, and hence follows a power law. There is evidence that the distributions of a wide variety of physical, biological, and man-made phenomena follow a power law, including the sizes of earthquakes, craters on the moon and of solar flares,[1] the foraging pattern of various species,[2] the sizes of activity patterns of neuronal populations,[3] the frequencies of words in most languages, frequencies of family names, the sizes of power outages and wars,[4] and many other quantities. It also underlies the "80/20 rule" or Pareto distribution governing the distribution of income or wealth within a population.


Properties of power laws

Scale invariance

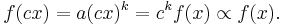

The main property of power laws that makes them interesting is their scale invariance. Given a relation $f(x) = ax^{k}$, scaling the argument $x$ by a constant factor $c$ causes only a proportionate scaling of the function itself. That is,

$$f(cx) = a(cx)^{k} = c^{k} f(x) \propto f(x).$$

In other words, scaling by a constant $c$ simply multiplies the original power-law relation by the constant $c^{k}$. Thus, it follows that all power laws with a particular scaling exponent are equivalent up to constant factors, since each is simply a scaled version of the others. This behavior is what produces the linear relationship when logarithms are taken of both $f(x)$ and $x$, since $\log f(x) = \log a + k \log x$, and the straight line on the log-log plot is often called the signature of a power law. With real data, such straightness is a necessary but not a sufficient condition for the data to follow a power-law relation. In fact, there are many ways to generate finite amounts of data that mimic this signature behavior but, in their asymptotic limit, are not true power laws. Thus, accurately fitting and validating power-law models is an active area of research in statistics.
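The scale-invariance property can be checked numerically. The Python sketch below is an illustration only: the function names and the values $a = 2$, $k = -1.5$ are arbitrary assumptions. It evaluates $f(x) = ax^{k}$, shows that the ratio $f(cx)/f(x)$ equals $c^{k}$ at every $x$, and recovers $k$ as the slope of the straight line through two points on log-log axes.

```python
import math


def power_law(x, a=2.0, k=-1.5):
    """Pure power law f(x) = a * x**k (the default a and k are arbitrary)."""
    return a * x ** k


def scale_ratio(x, c, a=2.0, k=-1.5):
    """Return f(c*x) / f(x); for a pure power law this is c**k, independent of x."""
    return power_law(c * x, a, k) / power_law(x, a, k)


def loglog_slope(x1, x2, a=2.0, k=-1.5):
    """Slope between (ln x1, ln f(x1)) and (ln x2, ln f(x2)); equals k for any x1 != x2."""
    rise = math.log(power_law(x2, a, k)) - math.log(power_law(x1, a, k))
    return rise / (math.log(x2) - math.log(x1))


if __name__ == "__main__":
    for x in (0.5, 3.0, 1000.0):
        # The ratio is the same at every x: 10**-1.5, about 0.0316
        print(x, scale_ratio(x, c=10.0))
    print(loglog_slope(1.0, 100.0))  # the exponent k = -1.5, up to rounding
```

Any function whose ratio $f(cx)/f(x)$ depended on $x$ would fail the first check, and its log-log slope would change from one pair of points to the next.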

Universality

The equivalence of power laws with a particular scaling exponent can have a deeper origin in the dynamical processes that generate the power-law relation. In physics, for example, phase transitions in thermodynamic systems are associated with the emergence of power-law distributions of certain quantities, whose exponents are referred to as the critical exponents of the system. Diverse systems with the same critical exponents (that is, which display identical scaling behaviour as they approach criticality) can be shown, via renormalization group theory, to share the same fundamental dynamics. For instance, water and CO2 near their liquid-gas critical points fall in the same universality class because they have identical critical exponents. In fact, almost all material phase transitions are described by a small set of universality classes. Similar observations have been made, though not as comprehensively, for various self-organized critical systems, where the critical point of the system is an attractor. Formally, this sharing of dynamics is referred to as universality, and systems with precisely the same critical exponents are said to belong to the same universality class.

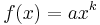

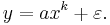

Power-law functions

The general power-law function follows the polynomial form given above, and is a ubiquitous form throughout mathematics and science. Notably, however, not all polynomial functions are power laws, because not all polynomials exhibit the property of scale invariance. Typically, power-law functions are polynomials in a single variable, and are explicitly used to model the scaling behavior of natural processes. For instance, allometric scaling laws for the relation of biological variables are some of the best known power-law functions in nature. In this context, any lower-order correction term $o(x^{k})$ is most typically replaced by a deviation term $\varepsilon$, which can represent uncertainty in the observed values (perhaps measurement or sampling errors) or provide a simple way for observations to deviate from the power-law function (perhaps for stochastic reasons):

$$y = ax^{k} + \varepsilon.$$
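As a minimal sketch of fitting the model $y = ax^{k} + \varepsilon$ by ordinary least squares, the Python example below uses the fact that, for a fixed $k$, the best $a$ has the closed form $\sum_i y_i x_i^{k} / \sum_i x_i^{2k}$, and scans a grid of candidate $k$ values. The function name, the grid-scan strategy and the synthetic data ($a = 2$, $k = 1.5$, Gaussian $\varepsilon$ with standard deviation 0.5) are illustrative assumptions, not a method taken from the references.

```python
import random


def fit_power_function(xs, ys, k_grid):
    """Least-squares fit of y = a * x**k + eps over a user-supplied grid of k values.

    For each k the optimal a is sum(y * x**k) / sum(x**(2k)); the (a, k) pair with
    the smallest sum of squared residuals is returned.
    """
    best = None
    for k in k_grid:
        xk = [x ** k for x in xs]
        a = sum(y * u for y, u in zip(ys, xk)) / sum(u * u for u in xk)
        sse = sum((y - a * u) ** 2 for y, u in zip(ys, xk))
        if best is None or sse < best[2]:
            best = (a, k, sse)
    return best[0], best[1]


if __name__ == "__main__":
    rng = random.Random(42)
    xs = [float(x) for x in range(1, 51)]
    # Synthetic data with an additive deviation term (illustrative values)
    ys = [2.0 * x ** 1.5 + rng.gauss(0.0, 0.5) for x in xs]
    grid = [0.5 + 0.001 * i for i in range(2001)]  # k from 0.5 to 2.5
    a_hat, k_hat = fit_power_function(xs, ys, grid)
    print(a_hat, k_hat)  # close to a = 2 and k = 1.5
```

The grid scan only keeps the example free of dependencies; a general nonlinear least-squares routine would normally be used instead.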

Scientific interest in power-law relations stems partly from the ease with which certain general classes of mechanisms generate them (see the Sornette reference below). The demonstration of a power-law relation in some data can point to specific kinds of mechanisms that might underlie the natural phenomenon in question, and can indicate a deep connection with other, seemingly unrelated systems (see the reference by Simon below and the subsection on universality above). The ubiquity of power-law relations in physics is partly due to dimensional constraints, while in complex systems, power laws are often thought to be signatures of hierarchy or of specific stochastic processes. A few notable examples of power laws are the Gutenberg–Richter law for earthquake sizes, Pareto's law of income distribution, structural self-similarity of fractals, and scaling laws in biological systems. The origins of power-law relations, and efforts to observe and validate them in the real world, are an active topic of research in many fields of science, including physics, computer science, linguistics, geophysics, neuroscience, sociology, economics and more.

However, much of the recent interest in power laws comes from the study of probability distributions: the distributions of a wide variety of quantities seem to follow the power-law form, at least in their upper tail (large events). The behavior of these large events connects these quantities to extreme value theory and the theory of large deviations, which consider the frequency of extremely rare events like stock market crashes and large natural disasters. It is primarily in the study of statistical distributions that the name "power law" is used; in other areas the power-law functional form is more often referred to simply as a polynomial form or polynomial function.

Examples of power-law functions

- The Stevens' power law of psychophysics

- The Stefan–Boltzmann law

- The Ramberg–Osgood stress–strain relationship

- The input-voltage–output-current curves of field-effect transistors and vacuum tubes approximate a square-law relationship, a factor in "tube sound".

- A 3/2-power law can be found in the plate characteristic curves of triodes.

- The inverse-square laws of Newtonian gravity and electrostatics

- Electrostatic potential and gravitational potential

- Model of van der Waals force

- Force and potential in simple harmonic motion

- Kepler's third law

- The initial mass function

- Gamma correction relating light intensity with voltage

- Kleiber's law relating animal metabolism to size, and allometric laws in general

- Behaviour near second-order phase transitions involving critical exponents

- Proposed form of experience curve effects

- The differential energy spectrum of cosmic-ray nuclei

- Square-cube law (ratio of surface area to volume)

- Constructal law

- Fractals

- The Pareto principle, also called the "80–20 rule"

- Zipf's law in corpus analysis and population distributions, amongst others, where the frequency of an item or event is inversely proportional to its frequency rank (i.e. the second most frequent item/event occurs half as often as the most frequent item, and so on).

- The safe operating area relating to maximum simultaneous current and voltage in power semiconductors.

Variants

Broken power law

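A broken power law is defined with a threshold $x_{\text{th}}$:

$$f(x) \propto x^{\alpha_1} \quad \text{for } x < x_{\text{th}},$$

$$f(x) \propto x_{\text{th}}^{\alpha_1 - \alpha_2}\, x^{\alpha_2} \quad \text{for } x > x_{\text{th}}.$$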
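A direct transcription of a broken power law is sketched below in Python, assuming the form $f(x) \propto x^{\alpha_1}$ for $x < x_{\text{th}}$ and $f(x) \propto x_{\text{th}}^{\alpha_1-\alpha_2} x^{\alpha_2}$ above the threshold; the function and parameter names are hypothetical. The factor $x_{\text{th}}^{\alpha_1-\alpha_2}$ is what makes the two branches join continuously at $x = x_{\text{th}}$.

```python
def broken_power_law(x, alpha1, alpha2, x_th, c=1.0):
    """Broken power law with threshold x_th (hypothetical helper).

    Below x_th: c * x**alpha1.  Above x_th: c * x_th**(alpha1 - alpha2) * x**alpha2,
    where the extra factor makes both branches equal c * x_th**alpha1 at x = x_th.
    """
    if x <= 0:
        raise ValueError("x must be positive")
    if x < x_th:
        return c * x ** alpha1
    return c * x_th ** (alpha1 - alpha2) * x ** alpha2


if __name__ == "__main__":
    # Slope -1 below the break at x = 10, slope -3 above it
    for x in (1.0, 9.999, 10.0, 100.0):
        print(x, broken_power_law(x, -1.0, -3.0, 10.0))
```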

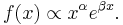

Power law with exponential cutoff

A power law with an exponential cutoff is simply a power law multiplied by an exponential function:

$$f(x) \propto x^{\alpha} e^{\beta x}.$$
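One way to see why a cutoff destroys scale invariance is to compute the local slope $d\ln f / d\ln x$, which is constant for a pure power law. The sketch below assumes the parametrization $f(x) \propto x^{\alpha} e^{\beta x}$ (with $\beta < 0$ for a decaying cutoff), for which the local slope is $\alpha + \beta x$; the function names and the finite-difference step are illustrative choices.

```python
import math


def cutoff_power_law(x, alpha, beta, c=1.0):
    """f(x) = c * x**alpha * exp(beta * x); beta < 0 gives a decaying cutoff, beta = 0 a pure power law."""
    return c * x ** alpha * math.exp(beta * x)


def local_loglog_slope(f, x, h=1e-6):
    """Central-difference estimate of d ln f / d ln x at x."""
    up, down = x * math.exp(h), x * math.exp(-h)
    return (math.log(f(up)) - math.log(f(down))) / (2.0 * h)


if __name__ == "__main__":
    pure = lambda x: cutoff_power_law(x, -2.0, 0.0)
    cut = lambda x: cutoff_power_law(x, -2.0, -0.01)
    for x in (1.0, 10.0, 100.0, 1000.0):
        # pure stays at -2; cut follows alpha + beta * x = -2 - 0.01 * x
        print(x, local_loglog_slope(pure, x), local_loglog_slope(cut, x))
```

For $x \ll 1/|\beta|$ the two curves are almost indistinguishable, which is the finite region over which a cutoff power law approximately scales.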

Curved power law

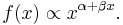

Power-law probability distributions

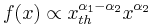

In the most general sense, a power-law probability distribution is a distribution whose density function (or mass function in the discrete case) has the form

where  , and

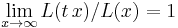

, and  is a slowly varying function, which is any function that satisfies

is a slowly varying function, which is any function that satisfies  with

with  constant. This property of

constant. This property of  follows directly from the requirement that

follows directly from the requirement that  be asymptotically scale invariant; thus, the form of

be asymptotically scale invariant; thus, the form of  only controls the shape and finite extent of the lower tail. For instance, if

only controls the shape and finite extent of the lower tail. For instance, if  is the constant function, then we have a power-law that holds for all values of

is the constant function, then we have a power-law that holds for all values of  . In many cases, it is convenient to assume a lower bound

. In many cases, it is convenient to assume a lower bound  from which the law holds. Combining these two cases, and where

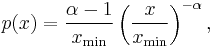

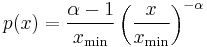

from which the law holds. Combining these two cases, and where  is a continuous variable, the power law has the form

is a continuous variable, the power law has the form

where the pre-factor to  is the normalizing constant. We can now consider several properties of this distribution. For instance, its moments are given by

is the normalizing constant. We can now consider several properties of this distribution. For instance, its moments are given by

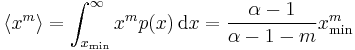

which is only well defined for  . That is, all moments

. That is, all moments  diverge: when

diverge: when  , the average and all higher-order moments are infinite; when

, the average and all higher-order moments are infinite; when  , the mean exists, but the variance and higher-order moments are infinite, etc. For finite-size samples drawn from such distribution, this behavior implies that the central moment estimators (like the mean and the variance) for diverging moments will never converge - as more data is accumulated, they continue to grow. These power-law probability distributions are also called Pareto-type distributions, distributions with Pareto tails, or distributions with regularly varying tails.

, the mean exists, but the variance and higher-order moments are infinite, etc. For finite-size samples drawn from such distribution, this behavior implies that the central moment estimators (like the mean and the variance) for diverging moments will never converge - as more data is accumulated, they continue to grow. These power-law probability distributions are also called Pareto-type distributions, distributions with Pareto tails, or distributions with regularly varying tails.
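The continuous form $p(x) = \frac{\alpha-1}{x_{\min}}(x/x_{\min})^{-\alpha}$ is easy to work with numerically. The Python sketch below (function names are hypothetical) evaluates the density, returns the moment formula $\langle x^{m}\rangle = \frac{\alpha-1}{\alpha-1-m}x_{\min}^{m}$, or infinity when $m \geq \alpha - 1$, and draws samples by inverting the tail probability $\Pr(X > x) = (x/x_{\min})^{1-\alpha}$.

```python
import math
import random


def pareto_pdf(x, alpha, x_min):
    """p(x) = (alpha-1)/x_min * (x/x_min)**(-alpha) for x >= x_min, else 0."""
    if x < x_min:
        return 0.0
    return (alpha - 1.0) / x_min * (x / x_min) ** (-alpha)


def pareto_moment(m, alpha, x_min):
    """<x^m> = (alpha-1)/(alpha-1-m) * x_min**m, finite only when m < alpha - 1."""
    if m >= alpha - 1.0:
        return math.inf
    return (alpha - 1.0) / (alpha - 1.0 - m) * x_min ** m


def sample_power_law(n, alpha, x_min, rng=random):
    """Inverse-transform sampling: since P(X > x) = (x/x_min)**(1-alpha),
    X = x_min * (1 - U)**(-1/(alpha-1)) with U uniform on [0, 1)."""
    return [x_min * (1.0 - rng.random()) ** (-1.0 / (alpha - 1.0)) for _ in range(n)]


if __name__ == "__main__":
    rng = random.Random(0)
    xs = sample_power_law(100_000, alpha=3.5, x_min=1.0, rng=rng)
    print(sum(xs) / len(xs), pareto_moment(1, 3.5, 1.0))  # sample mean vs 2.5/1.5
    print(pareto_moment(2, 2.5, 1.0))  # inf: the variance diverges for alpha <= 3
```

Repeating the sampling with, say, $\alpha = 2.5$ and recomputing the sample variance for growing $n$ should typically show it drifting upward rather than settling, in line with the divergent second moment.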

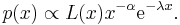

Another kind of power-law distribution, which does not satisfy the general form above, is the power law with an exponential cutoff

$$p(x) \propto L(x)\, x^{-\alpha} e^{-\lambda x}.$$

In this distribution, the exponential decay term $e^{-\lambda x}$ eventually overwhelms the power-law behavior at very large values of $x$. This distribution does not scale and is thus not asymptotically a power law; however, it does approximately scale over a finite region before the cutoff. (Note that the pure form above is a subset of this family, with $\lambda = 0$.) This distribution is a common alternative to the asymptotic power-law distribution because it naturally captures finite-size effects. For instance, although the Gutenberg–Richter law is commonly cited as an example of a power-law distribution, the distribution of earthquake magnitudes cannot scale as a power law in the limit $x \to \infty$ because there is a finite amount of energy in the Earth's crust and thus there must be some maximum size to an earthquake. As the scaling behavior approaches this size, it must taper off.

Graphical methods for the identification of power-law probability distributions from random samples

Although more sophisticated and robust methods have been proposed, the most frequently used graphical methods of identifying power-law probability distributions using random samples are Pareto quantile-quantile plots (or Pareto Q-Q plots), mean residual life plots (see, e.g., the books by Beirlant et al.[5] and Coles[6]) and log-log plots. Another, more robust graphical method uses bundles of residual quantile functions.[7] (Recall that power-law distributions are also called Pareto-type distributions.) It is assumed here that a random sample is obtained from a probability distribution, and that we want to know if the tail of the distribution follows a power law (in other words, we want to know if the distribution has a "Pareto tail"). Here, the random sample is called "the data".

Pareto Q-Q plots compare the quantiles of the log-transformed data to the corresponding quantiles of an exponential distribution with mean 1 (or to the quantiles of a standard Pareto distribution) by plotting the former versus the latter. If the resultant scatterplot suggests that the plotted points "asymptotically converge" to a straight line, then a power-law distribution should be suspected. A limitation of Pareto Q-Q plots is that they behave poorly when the tail index (also called the Pareto index) is close to 0, because Pareto Q-Q plots are not designed to identify distributions with slowly varying tails.[7]
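The Pareto Q-Q plot coordinates can be computed as in the following Python sketch. It assumes positive data and uses the exponential plotting positions $-\ln(1 - i/(n+1))$, one common choice among several; the function names are hypothetical. For an exact power law with density $p(x) \propto x^{-\alpha}$ above $x_{\min}$, $\ln(X/x_{\min})$ is exponentially distributed with rate $\alpha - 1$, so the points should lie near a line of slope $1/(\alpha-1)$.

```python
import math
import random


def pareto_qq_points(data):
    """Pairs (standard exponential quantile, sorted log-datum) for a Pareto Q-Q plot."""
    logs = sorted(math.log(x) for x in data)
    n = len(logs)
    return [(-math.log(1.0 - i / (n + 1.0)), logs[i - 1]) for i in range(1, n + 1)]


def ls_slope(points):
    """Ordinary least-squares slope of y on x."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points)
    sxx = sum((p[0] - mx) ** 2 for p in points)
    return sxy / sxx


if __name__ == "__main__":
    rng = random.Random(1)
    # Exact power law with alpha = 3 and x_min = 1: P(X > x) = x**-2
    xs = [(1.0 - rng.random()) ** -0.5 for _ in range(20_000)]
    print(ls_slope(pareto_qq_points(xs)))  # near 1/(alpha - 1) = 0.5
```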

On the other hand, in its version for identifying power-law probability distributions, the mean residual life plot consists of first log-transforming the data, and then plotting the average amount by which the log-transformed data that are higher than the i-th order statistic exceed it, versus the i-th order statistic, for all i = 1, ..., n, where n is the size of the random sample. If the resultant scatterplot suggests that the plotted points tend to "stabilize" about a horizontal straight line, then a power-law distribution should be suspected. Since the mean residual life plot is very sensitive to outliers (it is not robust), it usually produces plots that are difficult to interpret; for this reason, such plots are usually called Hill horror plots.[8]
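A sketch of the mean residual life computation for log-transformed data follows (Python; the function name is hypothetical, and continuous data without ties are assumed). For an exact power law with density exponent $\alpha$, the log-data above any threshold exceed it by $1/(\alpha-1)$ on average, which is why the plot should level off; each plotted value is in fact the Hill estimate of $1/(\alpha-1)$ at that threshold.

```python
import math
import random


def mean_residual_life_log(data):
    """For each order statistic of the log-data except the largest, return
    (threshold, mean excess of the log-data lying above that threshold)."""
    logs = sorted(math.log(x) for x in data)
    n = len(logs)
    # Suffix sums: suffix[i] = logs[i] + logs[i+1] + ... + logs[n-1]
    suffix = [0.0] * (n + 1)
    for i in range(n - 1, -1, -1):
        suffix[i] = suffix[i + 1] + logs[i]
    points = []
    for i in range(n - 1):
        count = n - i - 1  # points above logs[i], assuming no ties
        points.append((logs[i], suffix[i + 1] / count - logs[i]))
    return points


if __name__ == "__main__":
    rng = random.Random(2)
    xs = [(1.0 - rng.random()) ** -0.5 for _ in range(20_000)]  # alpha = 3, x_min = 1
    pts = mean_residual_life_log(xs)
    for j in (0, len(pts) // 2, len(pts) - 100, len(pts) - 2):
        print(pts[j])  # second coordinate near 0.5, noisy for the last few thresholds
```

The noise at the highest thresholds, where only a handful of points remain, is the instability that gives these plots their nickname.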

Log-log plots are an alternative way of graphically examining the tail of a distribution using a random sample. This method consists of plotting the logarithm of an estimator of the probability that a particular value occurs versus the logarithm of that value. Usually, this estimator is the proportion of times that the value occurs in the data set. If the points in the plot tend to "converge" to a straight line for large values on the x axis, then the researcher concludes that the distribution has a power-law tail. An example of the application of these types of plot can be found, for instance, in Jeong et al.[9] A disadvantage of these plots is that, in order to provide reliable results, they require huge amounts of data. In addition, they are appropriate only for discrete (or grouped) data.

Another graphical method for the identification of power-law probability distributions using random samples has been proposed.[7] This methodology consists of plotting a bundle for the log-transformed sample. Originally proposed as a tool to explore the existence of moments and the moment generating function using random samples, the bundle methodology is based on residual quantile functions (RQFs), also called residual percentile functions,[10][11][12][13][14][15][16] which provide a full characterization of the tail behavior of many well-known probability distributions, including power-law distributions, distributions with other types of heavy tails, and even non-heavy-tailed distributions. Bundle plots do not have the disadvantages of Pareto Q-Q plots, mean residual life plots and log-log plots mentioned above (they are robust to outliers, allow visual identification of power laws with small values of the tail index, and do not require large amounts of data). In addition, other types of tail behavior can be identified using bundle plots.

Plotting power-law distributions

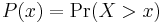

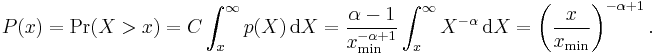

In general, power-law distributions are plotted on doubly logarithmic axes, which emphasizes the upper tail region. The most convenient way to do this is via the (complementary) cumulative distribution function (cdf), $P(x) = \Pr(X > x)$,

$$P(x) = \Pr(X > x) = \int_{x}^{\infty} p(X)\, dX = \left(\frac{x}{x_{\min}}\right)^{-\alpha+1}.$$

Note that the cdf is also a power-law function, but with a smaller scaling exponent. For data, an equivalent form of the cdf is the rank-frequency approach, in which we first sort the $n$ observed values in ascending order, and plot them against the vector $\left[1, \frac{n-1}{n}, \frac{n-2}{n}, \dots, \frac{1}{n}\right]$.
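The rank-frequency construction can be sketched in a few lines of Python (the function name is hypothetical). Each sorted value is paired with the fraction of observations at or above it, which gives exactly the vector $[1, (n-1)/n, \dots, 1/n]$ when all values are distinct; plotting both coordinates on logarithmic axes gives the doubly logarithmic plot described above, with no binning involved.

```python
def empirical_ccdf(data):
    """Rank-frequency form of the complementary cdf: sort the n values in ascending
    order and pair them with [1, (n-1)/n, ..., 1/n]."""
    xs = sorted(data)
    n = len(xs)
    return [(x, (n - i) / n) for i, x in enumerate(xs)]


if __name__ == "__main__":
    for x, frac in empirical_ccdf([3.0, 1.0, 2.0, 10.0]):
        print(x, frac)  # 1.0 1.0, 2.0 0.75, 3.0 0.5, 10.0 0.25
```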

Although it can be convenient to log-bin the data, or otherwise smooth the probability density (mass) function directly, these methods introduce an implicit bias in the representation of the data, and thus should be avoided. The cdf, on the other hand, introduces no bias in the data and preserves the linear signature on doubly logarithmic axes.

Estimating the exponent from empirical data

There are many ways of estimating the value of the scaling exponent for a power-law tail; however, not all of them yield unbiased and consistent answers. The most reliable techniques are often based on the method of maximum likelihood. Alternative methods are often based on making a linear regression on either the log-log probability, the log-log cumulative distribution function, or on log-binned data, but these approaches should be avoided, as they can all lead to highly biased estimates of the scaling exponent (see the Clauset et al. reference below).

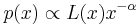

Maximum likelihood

For real-valued, independent and identically distributed data, we fit a power-law distribution of the form

to the data  , where the coefficient

, where the coefficient  is included to ensure that the distribution is normalized. Given a choice for

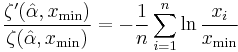

is included to ensure that the distribution is normalized. Given a choice for  , a simple derivation by this method yields the estimator equation

, a simple derivation by this method yields the estimator equation

where  are the

are the  data points

data points  . (For a more detailed derivation, see Hall or Newman below.) This estimator exhibits a small finite sample-size bias of order

. (For a more detailed derivation, see Hall or Newman below.) This estimator exhibits a small finite sample-size bias of order  , which is small when n > 100. Further, the uncertainty in the estimation can be derived from the maximum likelihood argument, and has the form

, which is small when n > 100. Further, the uncertainty in the estimation can be derived from the maximum likelihood argument, and has the form  . This estimator is equivalent to the popular Hill estimator from quantitative finance and extreme value theory.

. This estimator is equivalent to the popular Hill estimator from quantitative finance and extreme value theory.
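The continuous maximum likelihood estimator $\hat{\alpha} = 1 + n\left[\sum_i \ln(x_i/x_{\min})\right]^{-1}$ and its standard error $(\hat{\alpha}-1)/\sqrt{n}$ translate directly into code. The Python sketch below assumes $x_{\min}$ has already been chosen; the function name is hypothetical, and only points with $x_i \geq x_{\min}$ enter the estimate.

```python
import math
import random


def mle_alpha_continuous(data, x_min):
    """Return (alpha_hat, standard error) for a continuous power law above x_min."""
    tail = [x for x in data if x >= x_min]
    n = len(tail)
    if n == 0:
        raise ValueError("no data at or above x_min")
    s = sum(math.log(x / x_min) for x in tail)
    if s == 0.0:
        raise ValueError("all tail values equal x_min; alpha is not identifiable")
    alpha_hat = 1.0 + n / s
    return alpha_hat, (alpha_hat - 1.0) / math.sqrt(n)


if __name__ == "__main__":
    rng = random.Random(3)
    # Synthetic sample with alpha = 2.5 and x_min = 2 via inverse-transform sampling
    xs = [2.0 * (1.0 - rng.random()) ** (-1.0 / 1.5) for _ in range(50_000)]
    print(mle_alpha_continuous(xs, x_min=2.0))  # roughly (2.5, 0.0067)
```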

For a set of $n$ integer-valued data points $\{x_i\}$, again where each $x_i \geq x_{\min}$, the maximum likelihood exponent is the solution to the transcendental equation

$$\frac{\zeta'(\hat{\alpha}, x_{\min})}{\zeta(\hat{\alpha}, x_{\min})} = -\frac{1}{n} \sum_{i=1}^{n} \ln x_i,$$

where $\zeta(\alpha, x_{\min}) = \sum_{k=0}^{\infty} (k + x_{\min})^{-\alpha}$ is the incomplete (Hurwitz) zeta function and the prime denotes differentiation with respect to $\alpha$. The uncertainty in this estimate follows the same formula as for the continuous equation. However, the two equations for $\hat{\alpha}$ are not equivalent, and the continuous version should not be applied to discrete data, nor vice versa.
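For integer data the transcendental equation has no closed-form solution. The Python sketch below takes an equivalent route: it maximizes the discrete log-likelihood $\mathcal{L}(\alpha) = -n\ln\zeta(\alpha, x_{\min}) - \alpha\sum_i \ln x_i$, whose stationary point is exactly that equation, by golden-section search (the likelihood is concave in $\alpha$ because $\ln\zeta$ is convex). The Hurwitz zeta function is approximated by a truncated sum with an Euler–Maclaurin tail correction; the truncation length, search bracket and function names are illustrative assumptions.

```python
import math
import random


def hurwitz_zeta(s, q, terms=200):
    """zeta(s, q) = sum_{k>=0} (k + q)**(-s) for s > 1, q > 0: direct sum of the
    first `terms` terms plus an Euler-Maclaurin correction for the remainder."""
    head = sum((k + q) ** (-s) for k in range(terms))
    t = terms + q
    return head + t ** (1.0 - s) / (s - 1.0) + 0.5 * t ** (-s) + s * t ** (-s - 1.0) / 12.0


def mle_alpha_discrete(data, x_min, lo=1.01, hi=6.0, tol=1e-6):
    """Maximize L(alpha) = -n ln zeta(alpha, x_min) - alpha * sum(ln x_i)
    over [lo, hi] by golden-section search."""
    tail = [x for x in data if x >= x_min]
    n = len(tail)
    sum_log = sum(math.log(x) for x in tail)

    def loglik(alpha):
        return -n * math.log(hurwitz_zeta(alpha, x_min)) - alpha * sum_log

    g = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    c, d = b - g * (b - a), a + g * (b - a)
    while b - a > tol:
        if loglik(c) > loglik(d):  # maximum lies in [a, d]
            b, d = d, c
            c = b - g * (b - a)
        else:  # maximum lies in [c, b]
            a, c = c, d
            d = a + g * (b - a)
    return 0.5 * (a + b)


if __name__ == "__main__":
    rng = random.Random(4)
    # Approximate discrete power law (alpha = 2.5, x_min = 5): a continuous sample
    # rounded to the nearest integer, adequate only for moderately large x_min
    data = [math.floor(4.5 * (1.0 - rng.random()) ** (-1.0 / 1.5) + 0.5) for _ in range(20_000)]
    print(mle_alpha_discrete(data, 5))  # close to 2.5
```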

Further, both of these estimators require the choice of $x_{\min}$. For functions with a non-trivial $L(x)$ function, choosing $x_{\min}$ too small produces a significant bias in $\hat{\alpha}$, while choosing it too large increases the uncertainty in $\hat{\alpha}$ and reduces the statistical power of our model. In general, the best choice of $x_{\min}$ depends strongly on the particular form of the lower tail, represented by $L(x)$ above.

More about these methods, and the conditions under which they can be used, can be found in the Clauset et al. reference below. Further, this comprehensive review article provides usable code (Matlab, R and C++) for estimation and testing routines for power-law distributions.

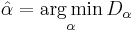

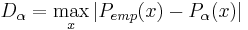

Kolmogorov–Smirnov estimation

Another method for the estimation of the power-law exponent, which does not assume independent and identically distributed (iid) data, uses the minimization of the Kolmogorov–Smirnov statistic, $D$, between the cumulative distribution functions of the data and the power law:

$$\hat{\alpha} = \underset{\alpha}{\arg\min}\, D_{\alpha},$$

with

$$D_{\alpha} = \max_{x} \left| P_{\mathrm{emp}}(x) - P_{\alpha}(x) \right|,$$

where $P_{\mathrm{emp}}(x)$ and $P_{\alpha}(x)$ denote the cdfs of the data and the power law with exponent $\alpha$, respectively. As this method does not assume iid data, it provides an alternative way to determine the power-law exponent for data sets in which the temporal correlation cannot be ignored.[3]
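A minimal version of the Kolmogorov–Smirnov estimator is sketched below in Python for the continuous power law with a known $x_{\min}$, whose cdf is $1 - (x/x_{\min})^{1-\alpha}$. The grid of candidate exponents and the function names are assumptions for illustration; the distance is computed with the usual formula for the largest gap between the empirical step function and a continuous cdf.

```python
import math
import random


def ks_distance(sorted_tail, alpha, x_min):
    """KS distance between the empirical cdf of sorted_tail and the power-law
    cdf 1 - (x/x_min)**(1 - alpha)."""
    n = len(sorted_tail)
    d = 0.0
    for i, x in enumerate(sorted_tail):
        f = 1.0 - (x / x_min) ** (1.0 - alpha)
        # The empirical cdf jumps from i/n to (i+1)/n at x
        d = max(d, (i + 1) / n - f, f - i / n)
    return d


def ks_alpha(data, x_min, alphas):
    """Return the candidate exponent in `alphas` that minimizes the KS distance."""
    tail = sorted(x for x in data if x >= x_min)
    return min(alphas, key=lambda a: ks_distance(tail, a, x_min))


if __name__ == "__main__":
    rng = random.Random(5)
    xs = [(1.0 - rng.random()) ** (-1.0 / 1.5) for _ in range(5_000)]  # alpha = 2.5
    grid = [2.0 + 0.01 * i for i in range(101)]
    print(ks_alpha(xs, 1.0, grid), math.sqrt(len(xs)))
```

The example uses iid synthetic data only to check the estimator; the point made above is that the criterion itself does not rely on independence.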

Two point fitting method

This criterion can be applied for the estimation of the power-law exponent in the case of scale-free distributions, and provides a more convergent estimate than the maximum likelihood method. The method is described in Guerriero et al. (2011), where it has been applied to study probability distributions of fracture aperture. In some contexts the probability distribution is described not by the cumulative distribution function but by the cumulative frequency of a property X, defined as the number of elements per meter (or per unit area, per second, etc.) for which X > x applies, where x is a variable real number. As an example, the cumulative distribution of the fracture aperture, X, for a sample of N elements is defined as 'the number of fractures per meter having aperture greater than x'. Use of cumulative frequency has some advantages; for example, it allows one to put on the same diagram data gathered from sample lines of different lengths at different scales (e.g. from outcrop and from microscope).

Examples of power-law distributions

- Pareto distribution (continuous)

- Zeta distribution (discrete)

- Yule–Simon distribution (discrete)

- Student's t-distribution (continuous), of which the Cauchy distribution is a special case

- Zipf's law and its generalization, the Zipf–Mandelbrot law (discrete)

- The scale-free network model

- Bibliograms

- Neuronal avalanches[3]

- Horton's laws describing river systems

- Richardson's Law for the severity of violent conflicts (wars and terrorism)

- Population of cities

- Numbers of religious adherents

- Frequency of words in a text

- Pink noise

- 90–9–1 principle on wikis

A great many power-law distributions have been conjectured in recent years. For instance, power laws are thought to characterize the behavior of the upper tails for the popularity of websites, the degree distribution of the webgraph, describing the hyperlink structure of the WWW, the net worth of individuals, the number of species per genus, the popularity of given names, Gutenberg–Richter law of earthquake magnitudes, the size of financial returns, and many others. However, much debate remains as to which of these tails are actually power-law distributed and which are not. For instance, it is commonly accepted now that the famous Gutenberg–Richter law decays more rapidly than a pure power-law tail because of a finite exponential cutoff in the upper tail.

Validating power laws

Although power-law relations are attractive for many theoretical reasons, demonstrating that data do indeed follow a power-law relation requires more than simply fitting a particular model to the data. In general, many alternative functional forms can appear to follow a power-law form over some range (see the Laherrere and Sornette reference below). Also, researchers usually have to face the problem of deciding whether or not a real-world probability distribution follows a power law. As a solution to this problem, Diaz[7] proposed a graphical methodology based on random samples that allows one to visually discern between different types of tail behavior. This methodology uses bundles of residual quantile functions, also called percentile residual life functions, which characterize many different types of distribution tails, including both heavy and non-heavy tails.

One method for validating power-law relations is to test many orthogonal predictions of a particular generative mechanism against data. Simply fitting a power-law relation to a particular kind of data is not, by itself, considered sufficient. As such, the validation of power-law claims remains a very active field of research in many areas of modern science.[4]


Notes

- [1] Newman, M. E. J. (2005). "Power laws, Pareto distributions and Zipf's law". Contemporary Physics 46 (5): 323–351. doi:10.1080/00107510500052444.

- [2] Humphries NE, Queiroz N, Dyer JR, Pade NG, Musyl MK, Schaefer KM, Fuller DW, Brunnschweiler JM, Doyle TK, Houghton JD, Hays GC, Jones CS, Noble LR, Wearmouth VJ, Southall EJ, Sims DW (2010). "Environmental context explains Lévy and Brownian movement patterns of marine predators". Nature 465 (7301): 1066–1069. doi:10.1038/nature09116. PMID 20531470.

- [3] Klaus A, Yu S, Plenz D (2011). Zochowski, Michal, ed. "Statistical Analyses Support Power Law Distributions Found in Neuronal Avalanches". PLoS ONE 6 (5): e19779. doi:10.1371/journal.pone.0019779. PMC 3102672. PMID 21720544. http://www.plosone.org/article/info%3Adoi%2F10.1371%2Fjournal.pone.0019779.

- [4] Aaron Clauset, Cosma Rohilla Shalizi, M. E. J. Newman (2009). "Power-law distributions in empirical data". SIAM Review 51 (4): 661–703. arXiv:0706.1062v2. doi:10.1137/070710111.

- [5] Beirlant, J., Teugels, J. L., Vynckier, P. (1996a), Practical Analysis of Extreme Values, Leuven: Leuven University Press.

- [6] Coles, S. (2001) An introduction to statistical modeling of extreme values. Springer-Verlag, London.

- [7] Diaz F. J. (1999). "Identifying Tail Behavior by Means of Residual Quantile Functions". Journal of Computational and Graphical Statistics 8 (3): 493–509. doi:10.2307/1390871.

- [8] Resnick, S. I. (1997), Heavy Tail Modeling and Teletraffic Data, The Annals of Statistics, 25, 1805–1869.

- [9] Jeong H, Tombor B, Albert R, Oltvai ZN, Barabási A-L (2000). "The large-scale organization of metabolic networks". Nature 407 (6804): 651–654. doi:10.1038/35036627. PMID 11034217.

- [10] Arnold, B. C., Brockett, P. L. (1983), When does the βth percentile residual life function determine the distribution?, Operations Research 31, no. 2, Operations Research Society of America, 391–396.

- [11] Joe, H., Proschan, F. (1984), Percentile residual life functions, Operations Research 32, no. 3, Operations Research Society of America, 668–678.

- [12] Joe, H. (1985), Characterizations of life distributions from percentile residual lifetimes, Ann. Inst. Statist. Math. 37, Part A, 165–172.

- [13] Csorgo, S., Viharos, L. (1992), Confidence bands for percentile residual lifetimes, Journal of Statistical Planning and Inference 30, North-Holland, 327–337.

- [14] Schmittlein, D. C., Morrison, D. G. (1981), The median residual lifetime: A characterization theorem and an application, Operations Research 29, no. 2, Operations Research Society of America, 392–399.

- [15] Morrison, D. G., Schmittlein, D. C. (1980), Jobs, strikes, and wars: Probability models for duration, Organizational Behavior and Human Performance 25, Academic Press, Inc., 224–251.

- [16] Gerchak, Y. (1984), Decreasing failure rates and related issues in the social sciences, Operations Research 32, no. 3, Operations Research Society of America, 537–546.

Bibliography

- Clauset, A., Shalizi, C. R. and Newman, M. E. J. (2009). "Power-law distributions in empirical data". SIAM Review 51 (4): 661–703. arXiv:0706.1062. doi:10.1137/070710111.

- V. Guerriero, S. Vitale, S. Ciarcia, S. Mazzoli (2011). "Improved statistical multi-scale analysis of fractures in carbonate reservoir analogues". Tectonophysics (Elsevier) 504: 14–24. doi:10.1016/j.tecto.2011.01.003.

- Hall, P. (1982). "On Some Simple Estimates of an Exponent of Regular Variation". Journal of the Royal Statistical Society, Series B (Methodological) 44 (1): 37–42. JSTOR 2984706.

- Laherrere, J. and D. Sornette (1998). "Stretched exponential distributions in Nature and Economy: 'Fat tails' with characteristic scales". European Physical Journal B 2 (4): 525–539. arXiv:cond-mat/9801293. doi:10.1007/s100510050276.

- Mitzenmacher, M. (2003). "A brief history of generative models for power law and lognormal distributions". Internet Mathematics 1: 226–251. http://www.internetmathematics.org/volumes/1/2/pp226_251.pdf.

- Newman, M. E. J. (2005). "Power laws, Pareto distributions and Zipf's law". Contemporary Physics 46 (5): 323–351. arXiv:cond-mat/0412004. doi:10.1080/00107510500052444.

- "Theory of Zipf's law and beyond", Alexander Saichev, Yannick Malevergne and Didier Sornette (2009) Lecture Notes in Economics and Mathematical Systems, Volume 632, Springer (November 2009), ISBN 978-3-642-02945-5

- Simon, H. A. (1955). "On a Class of Skew Distribution Functions". Biometrika 42 (3/4): 425–440. doi:10.2307/2333389. JSTOR 2333389.

- Critical Phenomena in Natural Sciences (Chaos, Fractals, Self-organization and Disorder: Concepts and Tools), Didier Sornette (2006) 2nd ed., 2nd print (Springer Series in Synergetics, Heidelberg).

- "Ubiquity", Mark Buchanan (2000) Weidenfeld & Nicolson, ISBN 0-297-64376-2

External links

- Zipf's law

- Zipf, Power-laws, and Pareto - a ranking tutorial

- Gutenberg-Richter Law

- Stream Morphometry and Horton's Laws

- Clay Shirky on Institutions & Collaboration: Power law in relation to the internet-based social networks

- Clay Shirky on Power Laws, Weblogs, and Inequality

- "How the Finance Gurus Get Risk All Wrong" by Benoit Mandelbrot & Nassim Nicholas Taleb. Fortune, July 11, 2005.

- "Million-dollar Murray": power-law distributions in homelessness and other social problems; by Malcolm Gladwell. The New Yorker, February 13, 2006.

- Benoit Mandelbrot & Richard Hudson: The Misbehaviour of Markets (2004)

- Philip Ball: Critical Mass: How one thing leads to another (2005)

- Tyranny of the Power Law from The Econophysics Blog

- So You Think You Have a Power Law — Well Isn't That Special? from Three-Toed Sloth, the blog of Cosma Shalizi, Professor of Statistics at Carnegie-Mellon University.

- Simple MATLAB script which bins data to illustrate power-law distributions (if any) in the data.

- The Erdős Webgraph Server visualizes the distribution of the degrees of the webgraph on the download page.
